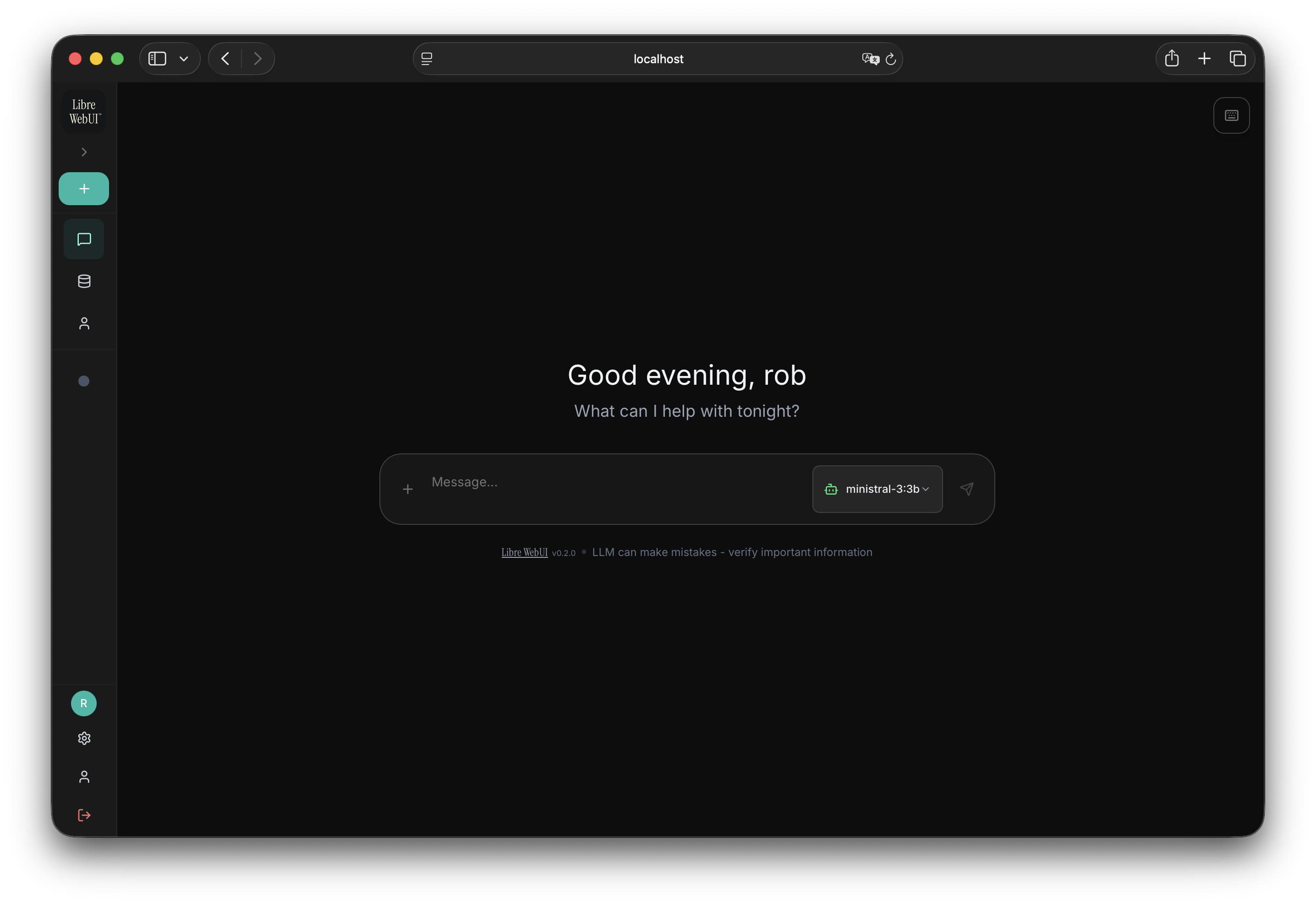

Your AI, Your Rules

Privacy-first AI chat interface. Run locally with Ollama or connect to OpenAI, Anthropic, and 9+ providers. Zero telemetry. Zero tracking.

```shell
npx libre-webui
```

Requires Node.js 18+. Install Ollama as well if you want to run models locally.

Everything You Need

A complete AI chat solution that respects your privacy

Local & Cloud AI

Run models locally with Ollama or connect to OpenAI, Anthropic, Groq, Gemini, Mistral, and more. Your choice.

Document Chat (RAG)

Upload PDFs, docs, and text files. Ask questions about your documents with semantic search and vector embeddings.
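This page does not describe how Libre WebUI implements document chat internally. The following is a minimal, stdlib-only sketch of the general retrieval idea behind RAG: split documents into chunks, embed them, rank chunks by cosine similarity to the question, and place the best matches in the prompt. It assumes a toy word-count vector in place of a real embedding model, and every function name here is hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase word counts.
    A real RAG pipeline would call a neural embedding model here."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse vectors (0.0 if either is empty)."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def chunk(text: str, size: int = 40) -> list[str]:
    """Split a document into chunks of at most `size` words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def retrieve(query: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k chunks most similar to the query, best first."""
    q = embed(query)
    ranked = sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)
    return ranked[:k]


doc_chunks = [
    "Invoices are due within 30 days of receipt.",
    "The office is closed on public holidays.",
    "Late invoices incur a 2% monthly fee.",
]
question = "When are invoices due?"
top = retrieve(question, doc_chunks, k=2)
# The retrieved chunks become context for the model's answer.
prompt = "Answer using only this context:\n" + "\n".join(top) + f"\n\nQuestion: {question}"
```

Production systems swap `embed` for a learned model and store vectors in an index, but the ranking step is the same comparison of query and chunk vectors.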

Interactive Artifacts

Render HTML, SVG, and React components directly in chat. Live preview with full-screen mode.

AES-256 Encryption

Chat history, documents, and settings are encrypted at rest with AES-256.

Custom Personas

Create AI personalities with unique behaviors and system prompts. Import/export personas as JSON.

Text-to-Speech

Listen to AI responses with multiple voice options. Supports browser TTS and ElevenLabs integration.

Keyboard Shortcuts

VS Code-inspired shortcuts for power users. Navigate, toggle settings, and control everything from the keyboard.

Multi-User Support

Role-based access control with SSO support, including built-in GitHub and Hugging Face OAuth.

Connect to Any Provider

One interface, unlimited possibilities

Get Started in Seconds

Choose your preferred installation method

Create Custom Plugins

Connect any OpenAI-compatible LLM with a simple JSON file

Available Plugins

Official plugins from the Libre WebUI repository.

```json
{
  "id": "custom-model",
  "name": "Custom Model",
  "type": "completion",
  "endpoint": "http://localhost:8000/v1/chat/completions",
  "auth": {
    "header": "Authorization",
    "prefix": "Bearer ",
    "key_env": "CUSTOM_MODEL_API_KEY"
  },
  "model_map": [
    "my-fine-tuned-llama"
  ]
}
```

Create Your Own Plugin

Start Your LLM Server

Run any OpenAI-compatible server: llama.cpp, vLLM, Ollama, or a custom FastAPI server.

Create Plugin JSON

Define your endpoint, authentication, and available models in a simple JSON file.

Upload to Libre WebUI

Go to Settings > Providers, upload your plugin, and enter your API key.

Start Chatting

Your custom models appear in the model selector. Full privacy, full control.
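To make the plugin fields concrete, here is a hedged sketch of the kind of request an OpenAI-compatible client builds from such a definition: a POST to `endpoint` with a JSON body of `model` and `messages`, and an auth header assembled from `auth.header`, `auth.prefix`, and the key read from the variable named in `auth.key_env`. This illustrates the OpenAI-compatible request shape only; it is an assumption, not Libre WebUI's actual code, and `build_chat_request` is a hypothetical helper. The request is built but not sent.

```python
import json
import os
import urllib.request

# Mirrors the example plugin definition on this page.
PLUGIN = {
    "id": "custom-model",
    "name": "Custom Model",
    "type": "completion",
    "endpoint": "http://localhost:8000/v1/chat/completions",
    "auth": {"header": "Authorization", "prefix": "Bearer ", "key_env": "CUSTOM_MODEL_API_KEY"},
    "model_map": ["my-fine-tuned-llama"],
}


def build_chat_request(plugin: dict, model: str, user_message: str) -> urllib.request.Request:
    """Hypothetical helper: build (but do not send) an OpenAI-style chat request."""
    if model not in plugin["model_map"]:
        raise ValueError(f"unknown model: {model}")
    auth = plugin["auth"]
    # Read the key from the environment variable the plugin names.
    api_key = os.environ.get(auth["key_env"], "")
    body = {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
    }
    return urllib.request.Request(
        plugin["endpoint"],
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            auth["header"]: auth["prefix"] + api_key,  # e.g. "Bearer <key>"
        },
        method="POST",
    )


req = build_chat_request(PLUGIN, "my-fine-tuned-llama", "Hello!")
# To actually send it (requires a server listening on the endpoint):
# with urllib.request.urlopen(req) as resp:
#     print(json.load(resp)["choices"][0]["message"]["content"])
```

For a provider that expects `x-api-key` with no prefix, only the `auth` object changes; the request-building logic stays the same.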

Plugin Fields Reference

| Field | Description |
|---|---|
| `id` | Unique identifier (lowercase, hyphens allowed) |
| `name` | Display name shown in the UI |
| `type` | `"completion"` for chat, `"tts"` for text-to-speech |
| `endpoint` | Full API URL (e.g., `http://localhost:8000/v1/chat/completions`) |
| `auth.header` | Auth header name (e.g., `Authorization` or `x-api-key`) |
| `auth.prefix` | Key prefix (`"Bearer "` or empty) |
| `auth.key_env` | Name of the environment variable that holds your API key |
| `model_map` | Array of available model identifiers |
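The checks Libre WebUI runs on upload are not described on this page, so the following is a hypothetical pre-upload checker derived only from the field reference. It assumes every listed field is required and that an `id` may contain digits alongside lowercase letters and single hyphens; both are assumptions, and `validate_plugin` is not part of any Libre WebUI API.

```python
import json
import re

# Assumptions: every field in the reference is required, and ids may contain
# digits as well as lowercase letters separated by single hyphens.
ID_PATTERN = re.compile(r"^[a-z0-9]+(?:-[a-z0-9]+)*$")
ALLOWED_TYPES = {"completion", "tts"}
REQUIRED_FIELDS = ("id", "name", "type", "endpoint", "auth", "model_map")


def validate_plugin(plugin: dict) -> list[str]:
    """Return human-readable problems; an empty list means the plugin looks valid."""
    missing = [f for f in REQUIRED_FIELDS if f not in plugin]
    if missing:
        return [f"missing field: {f}" for f in missing]

    errors = []
    if not isinstance(plugin["id"], str) or not ID_PATTERN.match(plugin["id"]):
        errors.append("id must be lowercase, with words separated by hyphens")
    if not isinstance(plugin["type"], str) or plugin["type"] not in ALLOWED_TYPES:
        errors.append(f"type must be one of {sorted(ALLOWED_TYPES)}")
    endpoint = plugin["endpoint"]
    if not isinstance(endpoint, str) or not endpoint.startswith(("http://", "https://")):
        errors.append("endpoint must be an http(s) URL")
    auth = plugin["auth"]
    if not isinstance(auth, dict):
        errors.append("auth must be an object")
    else:
        for key in ("header", "prefix", "key_env"):
            if not isinstance(auth.get(key), str):
                errors.append(f"auth.{key} must be a string")
    models = plugin["model_map"]
    if not isinstance(models, list) or not models or not all(isinstance(m, str) for m in models):
        errors.append("model_map must be a non-empty array of strings")
    return errors


example = json.loads("""
{
  "id": "custom-model",
  "name": "Custom Model",
  "type": "completion",
  "endpoint": "http://localhost:8000/v1/chat/completions",
  "auth": {"header": "Authorization", "prefix": "Bearer ", "key_env": "CUSTOM_MODEL_API_KEY"},
  "model_map": ["my-fine-tuned-llama"]
}
""")
print(validate_plugin(example))  # → []
```

Returning a list of messages rather than raising on the first problem lets you fix every issue in one pass before uploading.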

Ready to Own Your AI?

Join thousands of users who value privacy and control.